The Importance of A/B Testing in Publisher Revenue Optimisation

Summary:

The author is a former Partner Technology Manager, Sales Engineer and Account Manager at Google and YouTube. He is a Director & Co-Founder at Adottimo, a specialist programmatic agency based in London. He presents A/B testing as a core practice in publisher revenue optimization, one that brings the field more in line with a broader evidence-based approach. He gives a few examples of why A/B testing is important in publisher revenue optimization, using case studies from companies such as Google and Netflix.

Introduction

I come from an Engineering (Electronic/Electrical), IT, Telecom and FinTech background, so my approach to publisher optimization tends to be scientific, specifically based on the “Scientific Method”, the most prevalent form of evidence-based empirical testing used in the natural sciences. The Scientific Method has withstood the test of time, remained the gold-standard empirical method of knowledge acquisition, and driven the rapid development of every field of natural science since at least the 17th century.

In online Publisher Revenue Optimization, many decisions rest on assumptions: personal preferences, comparisons with the traditional print and TV media industries, and so on. Those with extensive experience can deduce specific themes and general best practice, but to be most effective, A/B testing should be performed continuously. The companies cited in this article all use A/B testing as an ongoing process.

Publisher revenue optimization asks very simple questions, such as: “Which are the best performing Ad Unit sizes for this viewport size?”, “Do Responsive ad units actually increase my earnings?”, “Does Dynamic Allocation actually increase my revenue yield?”, or slightly more complex ones, such as “What is the Optimal Min CPM for this ad unit in AdX?”. However, the questions are never ambiguous, since the motive is usually simple: “Does this make me more money!?” Luckily enough, the answer to that last question is always binary.

This article is an introductory guide for those with limited experience in the field, and for the more initiated who are not yet convinced of the value of A/B testing. It uses examples to give some context on why this type of empirical data acquisition and correlation inference, which in publisher revenue optimisation mostly means A/B testing, is important. The article is intended for a broad audience: whether you receive under a few million pageviews a month or hundreds of millions, whether you are technical or non-technical, business focused or not, the case studies apply regardless.

Modern Era – Why Should we Care?

Case Study 1: Google Search Ads

I’ll begin with a simple, well-known anecdote involving Google’s outspoken former VP for Search, Marissa Mayer (employee number 20 and Google’s first female engineer) [4], who later became President & CEO of Yahoo!

Marissa Mayer, then Vice President of Google Search Products and User Experience (the former “gatekeeper” of the Google Homepage), famously led a Google test which generated $200M in revenue per year for Google using nothing more than simple A/B testing [4].

The test compared 41 different shades of blue for advertising links in Gmail and Google Search, measuring which shade generated the highest Click Through Rate (CTR) and, hence, revenue.

As trivial as color choices might seem, clicks are a key part of Google’s revenue stream, and anything that enhances clicks means more money.

Google set the link color to the variation with the highest CTR, and was then able to generate an extra $200M a year in revenue from this single A/B testing experiment!

According to a statement in The Guardian by then Google UK MD, Dan Cobley[4]:

“As a result we learned that a slightly purpler shade of blue was more conducive to clicking than a slightly greener shade of blue, and gee whizz, we made a decision.”

When measuring empirically verifiable phenomena, the facts are hard to dispute. A scientifically sound and statistically significant A/B test on, as in the previous example, which link color produces a higher CTR, is extremely difficult to disprove. Each of the 40 variations was tested on a sample large enough for statistical significance, 2.5% of Google’s user base: millions of people per variation!
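To see why a test like this needs so many users per variation, a rough sample-size estimate helps. The sketch below uses the standard normal-approximation formula for comparing two proportions. The 2.0% and 2.1% CTRs, the 5% significance level and the 80% power are assumptions chosen for illustration, not figures from Google’s experiment.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_ctr: float, expected_ctr: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate users needed per variant to detect a CTR change.

    Normal-approximation formula for a two-sided test comparing
    two proportions.
    """
    if baseline_ctr == expected_ctr:
        raise ValueError("the two CTRs must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    variance = (baseline_ctr * (1 - baseline_ctr)
                + expected_ctr * (1 - expected_ctr))
    effect = expected_ctr - baseline_ctr
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical figures: a 2.0% baseline CTR and a hoped-for 2.1% CTR.
n = sample_size_per_variant(0.020, 0.021)
print(f"Users needed per variant: {n:,}")
```

With these assumed inputs the estimate runs to a few hundred thousand users per variant, and halving the detectable difference roughly quadruples it. Small effects on small CTRs need very large audiences.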

Context on Google’s Advertising Scale:

Alphabet (Google’s parent company) is the largest “media company” in the world (by advertising revenue), with over €81.97 Billion of earnings in 2017 from media spend, ahead of 2nd place Comcast (€72.64 Billion) and 3rd place The Walt Disney Company (€50.26 Billion) [9].

A/B Testing

An A/B Test is a controlled experiment which tests two variants (variant A vs variant B) to measure the change (difference/delta) in the value of a metric (or variable). In the Online Publishing industry, for instance, this metric may be CTR, Pageviews, Sessions per user, Bounce Rate, etc. One of the variants (say, variant A) is used as the control.
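A minimal sketch of these two ingredients, splitting users between a control and a variant and then measuring the change in a metric, might look like the following. The hash-based split, the experiment name and all the counts are hypothetical; real ad platforms handle assignment in their own way.

```python
import hashlib


def assign_variant(user_id: str, experiment: str = "ad-unit-test") -> str:
    """Deterministically place a user in variant A (control) or B."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return "A" if int(digest, 16) % 2 == 0 else "B"


def ctr(clicks: int, impressions: int) -> float:
    """Click-through rate as a fraction of impressions."""
    return clicks / impressions if impressions else 0.0


def relative_lift(control: float, variant: float) -> float:
    """Change in the metric, relative to the control."""
    return (variant - control) / control


# Hypothetical counts, for illustration only.
ctr_a = ctr(clicks=1_000, impressions=50_000)   # control: 2.0%
ctr_b = ctr(clicks=1_100, impressions=50_000)   # variant: 2.2%
print(f"Absolute delta: {ctr_b - ctr_a:.4f}")
print(f"Relative lift:  {relative_lift(ctr_a, ctr_b):.1%}")
```

Hashing the user ID rather than picking at random on every pageview keeps each user in the same variant for the whole test, which is one simple way to keep the comparison clean.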

In the case of the Google example cited earlier, the metric tested was the change in CTR, and the 40 different variants tested (in separate tests) were the different hues of blue advertising links. This is a form of statistical hypothesis testing, or “two-sample hypothesis testing” as it is known in the field of statistics [5]. Experimenters can use the Scientific Method to form a hypothesis of the sort “If a specific change is introduced, will it improve key metrics?”, evaluate their test with real users, and obtain empirically verifiable results.
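One common way to carry out such a two-sample test on CTRs is a two-proportion z-test. The sketch below is an illustration under that assumption, with made-up counts; it is not a description of how Google analysed its experiment.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(clicks_a: int, views_a: int,
                          clicks_b: int, views_b: int) -> tuple[float, float]:
    """Return (z statistic, two-sided p-value) for the CTR of B versus A."""
    p_a = clicks_a / views_a
    p_b = clicks_b / views_b
    # Pooled CTR under the null hypothesis that A and B perform the same.
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    z = (p_b - p_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts, for illustration only.
z, p = two_proportion_z_test(1_000, 50_000, 1_100, 50_000)
print(f"z = {z:.2f}, p = {p:.4f}")
if p < 0.05:
    print("The difference is statistically significant at the 5% level.")
else:
    print("Not enough evidence that the variants differ.")
```

The p-value is the probability of seeing a gap at least this large if the two variants really performed the same, so a small value is evidence that the change made a difference.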

A 2017 Harvard Business Review article titled “The Surprising Power of Online Experiments” states that the leading IT companies (Google, Amazon, Booking.com, Facebook, and Microsoft) each conduct more than 10,000 online controlled experiments annually, with many tests engaging millions of users [5]. Having worked within Google, I can say that their experiments can involve up to billions of users! The article goes on to mention that start-ups and large companies without digital roots, such as Walmart, Hertz, and Singapore Airlines, also run them regularly, though on a smaller scale. These organizations have discovered that an “experiment with everything” approach has surprisingly large payoffs [5].

As another example, in 2000 Google used an A/B test to ascertain the optimal number of search engine results to show per page. A/B tests have become vastly more complex since those early days, with multivariable/multinomial testing becoming the norm.

Case Study 2: Netflix

In a popular case cited by DZone, 46% of respondents surveyed by Netflix said that being able to browse the titles of Netflix content was the one thing they wanted before signing up for the service. So Netflix decided to run an A/B test on its registration process to see whether a redesigned process would help increase subscriptions.

Netflix Survey

Netflix created a new design which displayed movie titles to visitors before registration, as users had requested. The Netflix team wanted to find out whether the new design with movie titles would generate more registrations than the original design without the titles. This was analyzed by running an A/B test of the new design against the original design.

Hypothesis:

The Test’s Hypothesis was straightforward: Allowing visitors to view available movie titles before registering will increase the number of new signups.

In the A/B test the team introduced 5 different variants against the original design. The team then ran the test to see the impact.

Results:

The original design consistently beat all challengers, completely contrary to what 46% of respondents had requested!

So, why did the original design beat all the new designs, although 46% of respondents said that seeing which titles Netflix carries would persuade them to sign up for the service?

We know that demonstrating value early is a fundamental principle of the onboarding process. So how is it that one of the most data-driven companies in Silicon Valley doesn’t do this?

Conclusions:

Netflix attributes the success of the original design vs the variants as follows:

1) “Don’t confuse the meal with the menu.”

Anna Blaylock, a Product Designer at Netflix, wondered this too when she first started at the company. “Netflix is all about the experience,” she says. Just as a restaurant dining experience isn’t solely about the food on the menu, Netflix’s experience isn’t just about the titles [8].

2) Simplify Choices

Each of the variants built for the tests increased the number of choices and possible paths for Netflix visitors to follow. The original only had one choice: Start Your Free Month. Blaylock says that the low barrier to entry outweighs the need for users to see all their content before signing up.

3) Users Don’t Always Know What They Want

While the survey had shown that 46% of users wanted to browse titles before signing up for Netflix, the tests proved otherwise. Testing exposes our assumptions. That’s why it’s so important to run tests and trust the data.

Anna Blaylock left her interviewers with a good quote:

“Your assumptions are your windows on the world. Scrub them off every once in a while, or the light won’t come in.” – Isaac Asimov

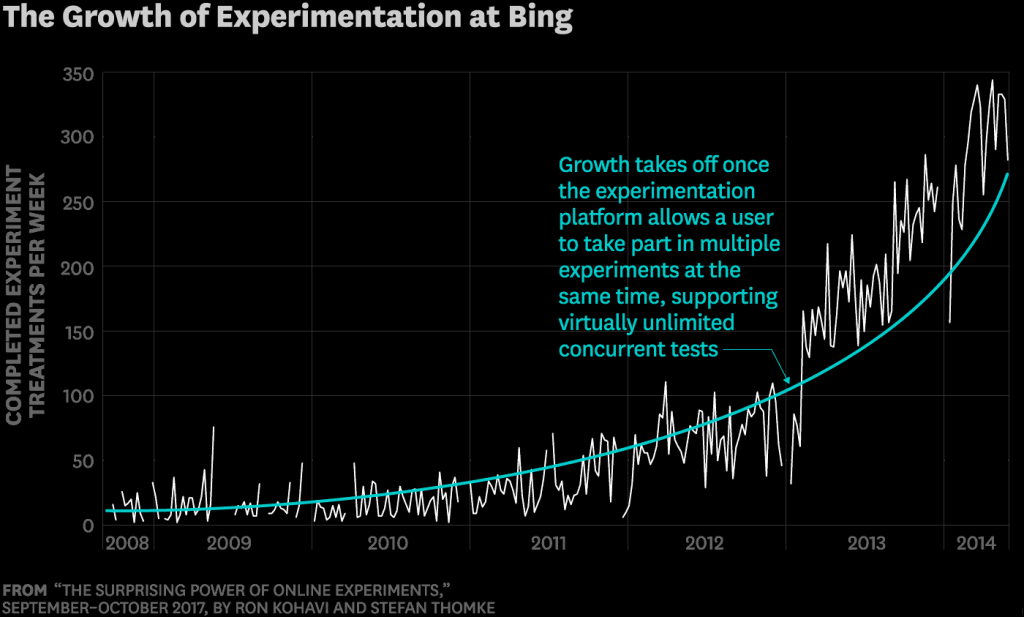

Case Study 3: Microsoft – Bing

The Harvard Business Review article cited earlier reports that about 80% of proposed changes at Bing are first run as controlled experiments [5].

As a first case, it highlights that in 2012 a Microsoft employee working on Bing had an idea about changing the way the search engine displayed ad headlines. Developing it wouldn’t require much effort (just a few days of an engineer’s time), but at a company of that scale it was one of hundreds of ideas proposed.

At the time, the program managers deemed it a low priority, so it languished for more than six months, until an engineer, who saw that the cost of writing the code for it would be small, launched a simple A/B test to assess its impact. Within hours the new headline variation was producing abnormally high revenue, triggering a “too good to be true” alert.

Companies of Microsoft’s scale usually use such alerts to detect when something goes terribly wrong (or very right); it is a form of anomaly detection. Usually, such alerts signal a bug, but not in this case. An analysis showed that the change had increased revenue by an appreciable 12%, which on an annual basis would come to more than $100 million in the United States alone, without hurting key user-experience metrics! It was the best revenue-generating idea in Bing’s history, but until the test its value was underappreciated [5].
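A “too good to be true” alert of this kind can be approximated by checking how far a result sits from what earlier experiments produced. The sketch below is an assumption about how such a check might work, not Microsoft’s implementation: the function name, the threshold of three standard deviations and the list of past lifts are all hypothetical.

```python
from statistics import mean, stdev


def too_good_to_be_true(observed_lift: float, past_lifts: list[float],
                        threshold: float = 3.0) -> bool:
    """Flag a lift that sits far outside what past experiments produced."""
    mu = mean(past_lifts)
    sigma = stdev(past_lifts)
    if sigma == 0:
        # No historical spread: any deviation at all is unusual.
        return observed_lift != mu
    z_score = (observed_lift - mu) / sigma
    # abs() flags results that are suspiciously bad as well as suspiciously good.
    return abs(z_score) > threshold


# Hypothetical relative revenue lifts from earlier experiments.
past_lifts = [0.002, -0.004, 0.006, 0.001, -0.003, 0.005, 0.000, -0.001]

print(too_good_to_be_true(0.12, past_lifts))   # a 12% lift, as in the Bing case
print(too_good_to_be_true(0.004, past_lifts))  # an ordinary result
```

An alert like this only says that a result deserves a second look. Deciding whether it is a bug or a genuine win still takes the kind of follow-up analysis the article describes.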

The article mentions that “Microsoft’s Analysis & Experimentation team consists of more than 80 people who on any given day help run hundreds of online controlled experiments on various products, including Bing, Cortana, Exchange, MSN, Office, Skype, Windows, and Xbox.”[5]

Multivariate Testing

Multivariate testing, or multinomial testing, is similar to A/B testing, but may test more than two versions at the same time or use more controls. Simple A/B tests are not valid for observational, quasi-experimental or other non-experimental situations, as is common with survey data and other, more complex phenomena [7]. Such cases are more likely to produce “incorrect results” using seemingly “sound math” but the wrong formulas!

Note that simple linear regression and multiple regression are not usually considered to be special cases of multivariate statistics, because the analysis is dealt with by considering the (univariate) conditional distribution of a single outcome variable given the other variables. Multivariate statistics already has more than 18 well-established models, each with its own analysis.
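When several versions are compared against one control at the same time, the chance of a false positive grows with each extra comparison. One simple, conservative remedy is the Bonferroni correction, sketched below with hypothetical conversion counts. It is offered as an illustration of the multiple-comparison problem, not as one of the multivariate models referred to above.

```python
from math import sqrt
from statistics import NormalDist


def p_value_vs_control(conv_c: int, n_c: int, conv_v: int, n_v: int) -> float:
    """Two-sided p-value for a variant's conversion rate versus the control."""
    pooled = (conv_c + conv_v) / (n_c + n_v)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_c + 1 / n_v))
    z = (conv_v / n_v - conv_c / n_c) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


def significant_variants(control: tuple[int, int],
                         variants: dict[str, tuple[int, int]],
                         alpha: float = 0.05) -> list[str]:
    """Names of variants that differ from the control after Bonferroni correction."""
    # Split the overall significance level across all comparisons.
    corrected_alpha = alpha / len(variants)
    return [name for name, (conv, n) in variants.items()
            if p_value_vs_control(*control, conv, n) < corrected_alpha]


# Hypothetical (conversions, visitors) counts, for illustration only.
control = (500, 10_000)
variants = {"B": (520, 10_000), "C": (610, 10_000), "D": (480, 10_000)}
print(significant_variants(control, variants))
```

With these made-up numbers only variant C clears the stricter threshold. The small differences shown by B and D are well within what chance alone would produce.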

Closing Remarks on A/B testing

I was fortunate to have personally met Marissa Mayer (then Vice President of Google Search Products and User Experience). I have learned many lessons from her legacy, worked with some of her Product Managers at Google, worked with publishers of all sizes and strategic importance in Google’s Publisher team, and learned first-hand and on the job from a number of really top-notch coaches, some of the best in the world at Publisher Optimization.

The cases presented are lessons that serve as a non-technical overview of A/B testing, which is part of a larger publisher growth strategy. We understand that every business is unique and has different goals and objectives, skills and resources, budgets and audience.

Google’s parent company Alphabet is now, and has been for at least 2 years, the largest “media” company in the world (by advertising revenue), with over €81.97 Billion of earnings in 2017 from media spend, ahead of 2nd place Comcast (€72.64 Billion) and 3rd place The Walt Disney Company (€50.26 Billion). A breakdown of the earnings per company is available in the Statista report [9].

As more and more advertising budgets are moving online it is increasingly important (especially for traditionally offline publishers) to focus on growing your online publisher revenues. However, it requires more sophisticated approaches (Programmatic RTB, Dynamic Allocation, Preferred Deals, Private Auctions, etc.) in addition to just A/B and Multivariate testing.

If you have read this post so far and are in the publishing business, we would love to hear from you. Please feel free to visit our website to contact us for a preliminary consultation.

About the Author:

Tangus Koech

Tangus is a former Partner Technology Manager, Sales Engineer and Account Manager at Google and YouTube. He is a Director & Co-Founder at Adottimo, a specialist programmatic agency based in London.

During his last year at Google he managed highly strategic media technology partners based in the US, United Kingdom, Germany, Japan, France and the Netherlands, which are amongst Google’s highest revenue generating countries. These partners contributed additional YouTube advertising revenue of over US$ 700 Million annually.

Tangus earned his stars as a monetisation expert during nearly 7 years at Google, working with publishers in both Developed and Emerging Markets. He built some of the first Million-Dollar publishers on the African continent.

He is a Programmatic Expert in Google AdSense, DFP and AdX. Within Alphabet (NASDAQ: GOOG), Google’s parent company, Tangus’ team, the Partner Solutions Organization, was responsible for accounts which collectively generated approximately 90% of all Alphabet’s Google/YouTube publisher revenue.

Some of his partners include WPP Group plc (UK), Opera Software ASA (Norway), Vodafone Group (UK), Orange Group SA (France), Endemol B.V. (International), GfK (Germany & Benelux) and Intage (Japan). In Emerging Markets he has managed accounts for, among others, Comcast NBCUniversal (International), DStv (South Africa), eNews Channel Africa (eNCA, South Africa), Nation Media Group (Kenya) and Standard Media Group (Kenya).

References:

[1] “Ancient Egyptian Medicine”, Wikipedia Online Encyclopedia

[2] “Edwin Smith papyrus (Egyptian medical book)”, Encyclopedia Britannica (Online ed.). Retrieved 1 January 2016.

[3] “Edwin Smith papyrus”, Wikipedia Online Encyclopedia

[4] “Why Google has 200m reasons to put engineers over designers”, The Guardian News and Media Limited, 5th February 2014.

[5] “The Surprising Power of Online Experiments”, Harvard Business Review, September-October 2017 Edition.

[6] “Online Controlled Experiments and A/B Tests”, Kohavi, Ron; Longbotham, Roger (2017). In Sammut, Claude; Webb, Geoff (eds.), “Encyclopedia of Machine Learning and Data Mining”, Springer.

[7] “A/B Testing”, Wikipedia Online Encyclopedia

[8] “The Registration Test Results Netflix Never Expected”, DZone, Kendrick Wang, January 4th 2016

[9] “Leading media companies in 2017, based on revenue (in billion euros)”, Statista